the execution time of my query is dominated by filtering/sorting the parent.250-350μs),īut I guess that will rarely be a real problem because - at least in my case Choose the Query Tool by performing a right-click on the database name. Open pgAdmin and build the database you want. Follow the below steps to create a database. Shows that the aggregated version is 50-100% slower (150-200μs vs. PostgreSQL offers two types for storing JSON data: json and jsonb. Before jumping to the Query JSONB array of objects in PostgreSQL, we need a database. The explain analyze output for both queries (on a trivially small test set) Is already correctly typed as a list of dictionaries. When the query is executed with sqlalchemy, children in the resulting rows

birthdate )) as children FROM parents p JOIN children c USING ( parent_id ) GROUP BY 1, 2 parent_id | name | children name, array_agg ( json_build_object ( 'child_id', c. So my final query looked like this: SELECT parent_id, p. Which takes a sequence of keys and values and creates a JSON object from it.

Which aggregates all values into an array. Put together all the children of one parent into an array.” Into it’s object structure, I thought, “It would be nice to let the database Just before I started writing the logic to pull apart the result and put it SELECT * FROM parents JOIN children USING ( parent_id ) ORDER BY parent_id parent_id | name | child_id | name | birthdate That constructs parent objects with their respective children.

#Postgresql json query code

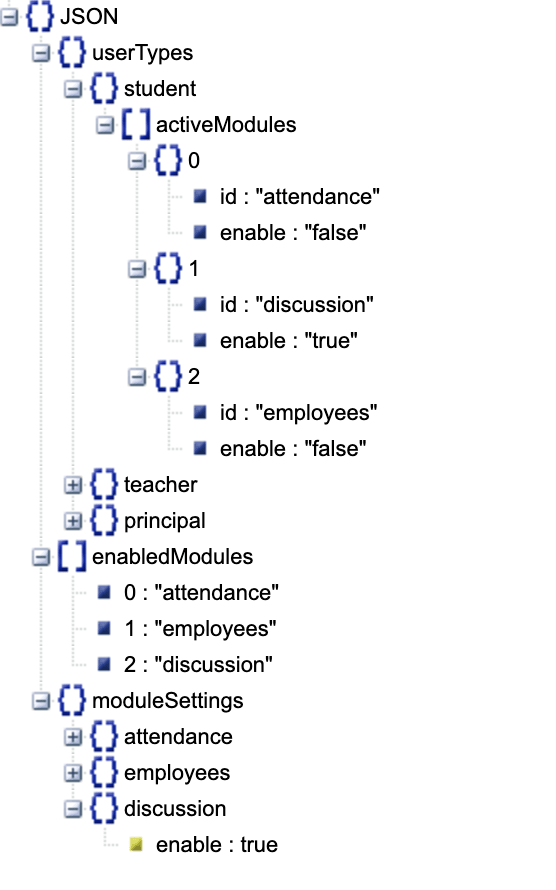

Without any further thinking I would do a query like this and a bit of code More control over the queries (rather complex logic with LIKE & sorting).īut the filtering/sorting is not the topic today, so I will leave it out inįor illustration, let’s assume the following schema: CREATE TABLE parents ( parent_id INTEGER PRIMARY KEY, name VARCHAR ) CREATE TABLE children ( child_id INTEGER PRIMARY KEY, name VARCHAR, birthdate DATE, parent_id INTEGER, FOREIGN KEY ( parent_id ) REFERENCES parents ) I decided not to use the ORM for the featureīecause it will be one of the hottest paths of the application and I wanted Weight, ok := dimensions.On client project I have to do a search in a structure of tables and return Log.Fatal("unexpected type for dimensions") be useful to filter for a specific key/value pair like so: Use to check if the JSONB column contains some specific json. SELECT * FROM items WHERE attrs->'ingredients' ? 'Salt' The ? operator can also be used to check for the existence of a specific SELECT * FROM items WHERE (attrs->'dimensions'->'weight')::numeric 'dimensions' ? 'weight' SELECT * FROM items WHERE attrs->'name' ILIKE 'p%' You can use the returned values as normal, although you may need to type Or you can use -> to do the same thing, but this returns a TEXT value SELECT attrs->'dimensions'->'weight' FROM items The -> operator is used to get the value for a key. Create an index on a specific key/value pair in the JSONB column.ĬREATE INDEX idx_items_attrs_organic ON items USING gin ((attrs->'organic')) Create an index on all key/value pairs in the JSONB column.ĬREATE INDEX idx_items_attrs ON items USING gin (attrs) lowercase `true` and `false` spellings are accepted. You can insert any well-formed json input into the column. Here's a cribsheet for the essential commands: - Create a table with a JSONB column. 
The PostgreSQL documentation recommends that you should generally use JSONB, unless you have a specific reason not too (like needing to preserve key order). JSONB also supports the ? (existence) and (containment) operators, whereas JSON doesn't. It may change the key order, and will remove whitespace and delete duplicate keys. This makes it slower to insert but faster to query. JSONB stores a binary representation of the JSON input.JSON stores an exact copy of the JSON input.PostgreSQL provides two JSON-related data types that you can use - JSON and JSONB.
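For comparison, here is what the "bit of code" for the flat `ORDER BY parent_id` query could look like. It is written in Python because the project uses SQLAlchemy. This is a hypothetical sketch, not the author's code. The sample `ROWS` tuples and the `group_children` helper are made up. The sketch assumes the rows arrive sorted by `parent_id`, which the `ORDER BY` guarantees. That ordering matters because `itertools.groupby` only merges consecutive rows with equal keys.

```python
from datetime import date
from itertools import groupby
from operator import itemgetter

# Hypothetical rows, shaped like the flat JOIN result and ordered by parent_id:
# (parent_id, parent_name, child_id, child_name, birthdate)
ROWS = [
    (1, "Anna", 10, "Ben", date(2010, 5, 1)),
    (1, "Anna", 11, "Clara", date(2012, 8, 14)),
    (2, "David", 12, "Emil", date(2015, 1, 30)),
]


def group_children(rows):
    """Collapse one-row-per-child results into one dict per parent.

    Assumes rows are sorted by parent_id: itertools.groupby only merges
    *consecutive* rows that share a key.
    """
    parents = []
    for parent_id, group in groupby(rows, key=itemgetter(0)):
        group = list(group)  # materialise so the parent name can be read
        parents.append({
            "parent_id": parent_id,
            "name": group[0][1],
            "children": [
                {"child_id": child_id, "name": name, "birthdate": birthdate}
                for _, _, child_id, name, birthdate in group
            ],
        })
    return parents


if __name__ == "__main__":
    for parent in group_children(ROWS):
        print(parent["name"], [c["name"] for c in parent["children"]])
```

The aggregated query moves exactly this grouping into PostgreSQL. One difference to keep in mind is that JSON has no date type. Inside the objects built by `json_build_object`, `birthdate` therefore arrives as an ISO 8601 string (such as `"2015-01-30"`). The flat query, by contrast, returns a real `DATE` column, which a driver such as psycopg2 maps to `datetime.date`.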

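The `@>` operator from the JSONB cribsheet can behave surprisingly with nested data. As an illustration only, here is a small Python model of JSONB containment. It follows the rules described in the PostgreSQL documentation:

- An object contains another if every right-hand key is present with a contained value.
- An array contains another if every right-hand element is contained in some left-hand element, ignoring order and duplicates.
- Scalars must be equal.
- At the top level only, an array may also contain a bare primitive.

`jsonb_contains` and its helpers are hypothetical names, not a PostgreSQL or psycopg API. In real queries you would use `@>` itself, for example a filter like `attrs @> '{"organic": true}'`, which the GIN index on `attrs` can serve.

```python
import json
from typing import Any


def _is_scalar(value: Any) -> bool:
    """JSON scalars are strings, numbers, booleans and null."""
    return not isinstance(value, (dict, list))


def _scalar_equal(a: Any, b: Any) -> bool:
    # JSON true/false must not match the numbers 1/0, even though Python
    # treats True == 1, so booleans are only compared with booleans.
    if isinstance(a, bool) or isinstance(b, bool):
        return isinstance(a, bool) and isinstance(b, bool) and a == b
    # Numbers compare by value, so 1 matches 1.0.
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        return a == b
    return type(a) is type(b) and a == b


def _contains(left: Any, right: Any) -> bool:
    if isinstance(right, dict):
        # Every key on the right must exist on the left with a contained value.
        return isinstance(left, dict) and all(
            key in left and _contains(left[key], value)
            for key, value in right.items()
        )
    if isinstance(right, list):
        # Every right-hand element must be contained in some left-hand
        # element; order and duplicates are ignored.
        return isinstance(left, list) and all(
            any(_contains(l_item, r_item) for l_item in left) for r_item in right
        )
    return _is_scalar(left) and _scalar_equal(left, right)


def jsonb_contains(left_json: str, right_json: str) -> bool:
    """Hypothetical model of `left::jsonb @> right::jsonb`."""
    left, right = json.loads(left_json), json.loads(right_json)
    # Special case, top level only: an array may contain a primitive value.
    if isinstance(left, list) and _is_scalar(right):
        return any(_is_scalar(item) and _scalar_equal(item, right) for item in left)
    return _contains(left, right)
```

For example, `jsonb_contains('{"foo": {"bar": "baz"}}', '{"bar": "baz"}')` is `False`. As in PostgreSQL, containment does not search nested objects for a matching key/value pair. The structure on the right has to line up with the structure on the left.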